k8s 1.17.5 high-availability deployment


Environment preparation

K8S version: 1.17.5

| IP | OS | Host spec | Notes |
|---|---|---|---|
| 192.168.0.2 | debian9.5 | 4 cores, 8G | control plane (apiserver/scheduler/controller/etcd) |
| 192.168.0.3 | debian9.5 | 4 cores, 8G | control plane (apiserver/scheduler/controller/etcd) |
| 192.168.0.4 | debian9.5 | 4 cores, 8G | control plane (apiserver/scheduler/controller/etcd) |
| 192.168.0.5 | debian9.5 | 4 cores, 8G | node |
| 192.168.0.6 | debian9.5 | 4 cores, 8G | node |
| 192.168.0.7 | debian9.5 | 4 cores, 8G | node |
| 59.111.230.7 | LB | X | Load balancer with the control-plane nodes as backends (can be replaced by a keepalived VIP) |

Machine initialization (run on all nodes)

- Use the aliyun debian mirror

- Install docker 18.09.9 (https://docs.docker.com/engine/install/debian/)

- Install kubectl, kubeadm and kubelet

System initialization: disable swap, enable IP forwarding, etc.

    #!/bin/bash
    # add the aliyun Kubernetes apt repository
    curl -fsSL https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -
    cat <<EOF >/etc/apt/sources.list.d/kubernetes.list
    deb http://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
    EOF
    # install the pinned 1.17.5 packages
    apt-get update
    apt-get install -y kubelet=1.17.5-00 kubeadm=1.17.5-00 kubectl=1.17.5-00
    # let bridged traffic pass through iptables and enable IP forwarding
    modprobe br_netfilter
    echo '1' > /proc/sys/net/bridge/bridge-nf-call-iptables
    echo '1' > /proc/sys/net/ipv4/ip_forward
    # disable swap
    swapoff -a
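The `echo` writes to `/proc` and `swapoff -a` in the script above only last until the next reboot. A minimal sketch of making them persistent follows, assuming a systemd-based Debian that reads `/etc/sysctl.d` and `/etc/modules-load.d`. The function names and the `k8s.conf` file names are hypothetical and not part of the original setup.

```shell
#!/bin/bash
# Hypothetical helpers; names and target paths are illustrative assumptions.

# Write the two kernel settings from the init script in sysctl.conf format.
write_k8s_sysctl() {
    cat > "$1" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}

# Comment out every active swap entry in the given fstab-style file.
disable_swap_entries() {
    sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Possible usage on a node (as root), assuming the conventional paths:
#   echo br_netfilter > /etc/modules-load.d/k8s.conf
#   write_k8s_sysctl /etc/sysctl.d/k8s.conf && sysctl --system
#   disable_swap_entries /etc/fstab
```

Both helpers take the target path as an argument, so they can be tried on a copy of the file before touching `/etc`.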

Master node initialization

The kubeadm init configuration file kubeadm-config.yaml is as follows:

    apiVersion: kubeadm.k8s.io/v1beta2
    kind: ClusterConfiguration
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
    kubernetesVersion: v1.17.5 # k8s version
    apiServer:
      certSANs: # apiserver node IPs and the VIP
      - 192.168.0.2
      - 192.168.0.3
      - 192.168.0.4
      - 59.111.230.7
    controlPlaneEndpoint: "59.111.230.7:6443"
    networking:
      podSubnet: "10.244.0.0/16"

Initialize the cluster with kubeadm

    kubeadm init --config=kubeadm-config.yaml | tee kubeadm-init.log

Record the master and node join commands from the output

    You can now join any number of control-plane nodes by copying certificate authorities
    and service account keys on each node and then running the following as root:

      kubeadm join 59.111.230.7:6443 --token 876e54.j6so6f38paqd8wq2 \
        --discovery-token-ca-cert-hash sha256:262e95150dc375ec0992f7cd8297dc6e7019af14175039eabbd3d7e631ec594e \
        --control-plane

    Then you can join any number of worker nodes by running the following on each as root:

    kubeadm join 59.111.230.7:6443 --token 876e54.j6so6f38paqd8wq2 \
        --discovery-token-ca-cert-hash sha256:262e95150dc375ec0992f7cd8297dc6e7019af14175039eabbd3d7e631ec594e
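Since the init output was saved with `tee`, the join command can be pulled back out of the log instead of being copied by hand. The following is a rough sketch, assuming GNU sed and a log laid out like the output above. `worker_join_cmd` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: print the worker join command (the one without
# --control-plane) from a saved kubeadm init log, joined onto a single line.
worker_join_cmd() {
    # 1) merge lines that end in a backslash with the line that follows
    # 2) keep the join commands, drop the control-plane variant
    # 3) strip leading indentation
    sed -e ':a' -e '/\\$/N; s/\\\n[[:space:]]*//; ta' "$1" \
        | grep 'kubeadm join' \
        | grep -v -- '--control-plane' \
        | sed 's/^[[:space:]]*//'
}

# Possible usage:
#   worker_join_cmd kubeadm-init.log
```

Bootstrap tokens expire after 24 hours by default. Once the logged token is no longer valid, `kubeadm token create --print-join-command` on a master prints a fresh worker join command.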

Network installation (flannel is the one installed here)

flannel

    kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

calico

    kubectl create -f https://docs.projectcalico.org/manifests/tigera-operator.yaml
    kubectl create -f https://docs.projectcalico.org/manifests/custom-resources.yaml

Reference: https://docs.projectcalico.org/getting-started/kubernetes/quickstart

Joining master and worker nodes

Before a master node joins, the certificates must first be copied to it

The host list file master_hosts contains the remaining masters:

    192.168.0.3
    192.168.0.4

Copy the certificates from the first master, then run the join command on each new master:

    # on the first master: copy the CA, service account and etcd CA files
    pscp -p 20 -h master_hosts -O "StrictHostKeyChecking=no" /etc/kubernetes/pki/ca.crt /etc/kubernetes/pki/ca.crt
    pscp -p 20 -h master_hosts -O "StrictHostKeyChecking=no" /etc/kubernetes/pki/ca.key /etc/kubernetes/pki/ca.key
    pscp -p 20 -h master_hosts -O "StrictHostKeyChecking=no" /etc/kubernetes/pki/sa.key /etc/kubernetes/pki/sa.key
    pscp -p 20 -h master_hosts -O "StrictHostKeyChecking=no" /etc/kubernetes/pki/sa.pub /etc/kubernetes/pki/sa.pub
    pscp -p 20 -h master_hosts -O "StrictHostKeyChecking=no" /etc/kubernetes/pki/front-proxy-ca.crt /etc/kubernetes/pki/front-proxy-ca.crt
    pscp -p 20 -h master_hosts -O "StrictHostKeyChecking=no" /etc/kubernetes/pki/front-proxy-ca.key /etc/kubernetes/pki/front-proxy-ca.key
    pscp -p 20 -h master_hosts -O "StrictHostKeyChecking=no" /etc/kubernetes/pki/etcd/ca.crt /etc/kubernetes/pki/etcd/ca.crt
    pscp -p 20 -h master_hosts -O "StrictHostKeyChecking=no" /etc/kubernetes/pki/etcd/ca.key /etc/kubernetes/pki/etcd/ca.key

    # on each new master: join as a control-plane node
    kubeadm join 59.111.230.7:6443 --token 876e54.j6so6f38paqd8wq2 \
        --discovery-token-ca-cert-hash sha256:262e95150dc375ec0992f7cd8297dc6e7019af14175039eabbd3d7e631ec594e \
        --control-plane

Worker nodes can join directly

    kubeadm join 59.111.230.7:6443 --token 876e54.j6so6f38paqd8wq2 \
        --discovery-token-ca-cert-hash sha256:262e95150dc375ec0992f7cd8297dc6e7019af14175039eabbd3d7e631ec594e
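The certificate copy step for the master nodes repeats one pscp invocation for eight files, which can be generated from a list. The sketch below only prints the commands as a dry run. `PKI_FILES` and `print_copy_cmds` are hypothetical names, and the pscp options are taken unchanged from the commands above.

```shell
#!/bin/bash
# Files a joining control-plane node needs, relative to /etc/kubernetes/pki
# (the same eight files as in the pscp commands above).
PKI_FILES="ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key etcd/ca.crt etcd/ca.key"

# Print one pscp command per file for the given host list file (dry run only).
print_copy_cmds() {
    for f in $PKI_FILES; do
        printf 'pscp -p 20 -h %s -O "StrictHostKeyChecking=no" /etc/kubernetes/pki/%s /etc/kubernetes/pki/%s\n' "$1" "$f" "$f"
    done
}

# Possible usage: review the output first, then pipe it to a shell.
#   print_copy_cmds master_hosts
#   print_copy_cmds master_hosts | sh
```

scp does not create missing parent directories, so `/etc/kubernetes/pki/etcd` may need to exist on the target hosts before the two etcd files are copied.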

Issue log

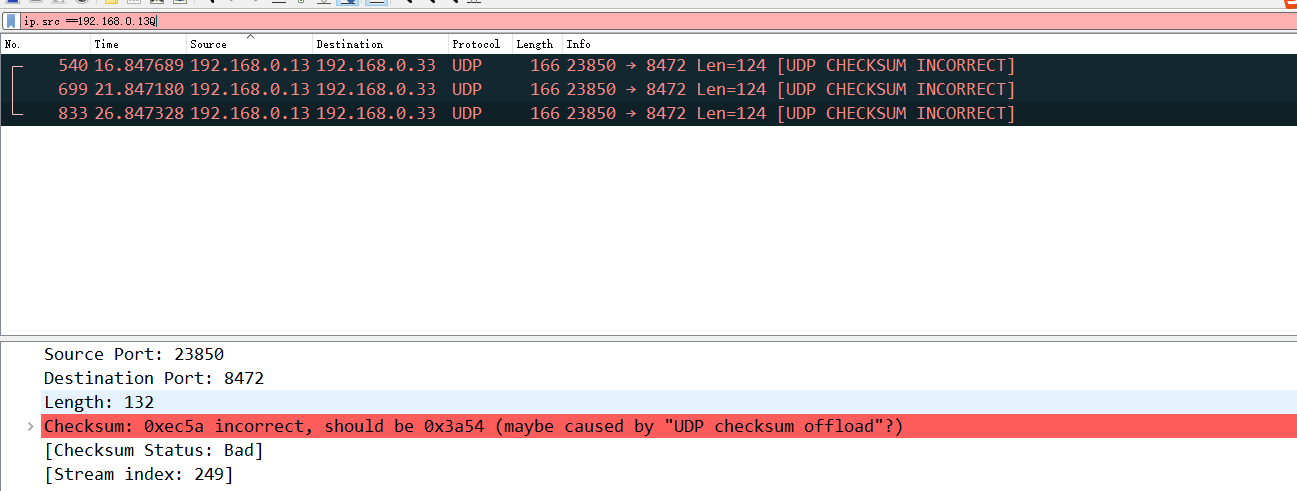

DNS name resolution fails (k8s + flannel DNS resolution failure)

    hostNetwork: true
    dnsPolicy: ClusterFirstWithHostNet

Cause: the UDP packets of the DNS requests are dropped because of a checksum error

Root cause: a kernel bug, see https://github.com/torvalds/linux/commit/ea64d8d6c675c0bb712689b13810301de9d8f77a

Solution 1:

Disable tx checksum offload

    ethtool -K flannel.1 tx-checksum-ip-generic off
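To confirm that the offload is really off, the feature list printed by `ethtool -k` (lowercase, read-only) can be filtered. This is a small sketch, and `tx_csum_state` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: read `ethtool -k <dev>` output on stdin and print the
# state ("on" or "off") of the tx-checksum-ip-generic feature.
tx_csum_state() {
    awk '$1 == "tx-checksum-ip-generic:" {print $2}'
}

# Possible usage on a node:
#   ethtool -k flannel.1 | tx_csum_state
```

ethtool settings are not stored anywhere, so the command presumably has to be reapplied after a reboot or whenever flannel recreates the `flannel.1` interface.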

Solution 2:

Upgrading kube-proxy works around this problem: kube-proxy-amd64:v1.17.9

The new rules:

    -A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING
    -A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN
    -A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0
    -A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE
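Read top to bottom, the KUBE-POSTROUTING chain returns early for packets that do not carry the 0x4000 mark, flips that bit off for the rest, and only then masquerades them. The mark arithmetic can be modelled in shell as a sketch, following the iptables MARK target's definition of `--set-xmark value/mask` (zero the bits in mask, then XOR value into the mark). The function names are hypothetical.

```shell
#!/bin/bash
# Model of `-m mark --mark 0x4000/0x4000`: succeeds if the 0x4000 bit is set.
needs_masquerade() {
    [ $(( $1 & 0x4000 )) -eq $(( 0x4000 )) ]
}

# Model of `-j MARK --set-xmark value/mask`, called as: set_xmark MARK VALUE MASK.
# Clears the mask bits, XORs in the value, and prints the new mark in hex.
set_xmark() {
    printf '0x%x\n' $(( ($1 & ~$3) ^ $2 ))
}

# Possible usage: a packet marked 0x4000 passes the check and leaves
# the MARK rule with the bit cleared.
#   needs_masquerade 0x4000 && set_xmark 0x4000 0x4000 0x0
```

Because only marked packets reach the MARK rule, the XOR with 0x4000 always clears the bit there and leaves any other mark bits untouched.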